Future Now

The IFTF Blog

Bodies of Data

Who are we through the eyes of algorithms?

Our identities have always been dynamic. We have to reconcile who we think we are with who we are in the eyes of our families and communities—and in the future, in the eyes of machines.

Going forward, we’ll have to reconcile our identities with the massive amounts of data our body area networks produce, and what powerful algorithms can infer from that data. Computing devices within and around our bodies will contribute to stunningly detailed portraits of us, offering new views into who we are. These bodies of data will challenge, reinforce, and transform our existing identities, and create entirely new kinds of identity we never imagined.

More than a decade ago, an episode of the TV show “The King of Queens” featured a subplot in which a character’s TiVo “thought he was gay” because the device’s algorithm kept offering him programs with LGBTQ themes. This plot point was based on the real-life experience of a friend of one of the show’s producers. While some aspects of that episode may or may not have aged well, the idea that our electronics have an opinion about who we are is more relevant today than ever. As we move through the Web and about our lives at home, in cars, and on city streets, we generate massive amounts of data.

And marketers use these data to build profiles that capture preferences and needs, define markets, and provide insight for new products and services.

At the center of all this are algorithms that use various data points to infer aspects of our identities, such as gender, race, religion, or sexual orientation. For instance, Google’s ad settings page shows you at least a partial view of the profile that the software giant has assembled on you, including the assumptions it has made about your age, gender, and interests. These profiles are often accurate, but they’re far from perfect. Today it might be reassuring when there’s a discrepancy between who you think you are and who Google thinks you are. We assume that any discrepancy is the fault of an imperfect algorithm. Maybe your kid sister’s searching spree was mistakenly attributed to you, skewing the algorithm’s view of who you are.

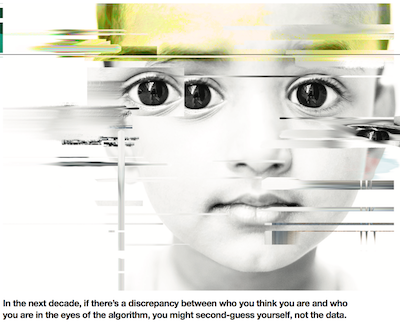

But the technology is getting better all the time. With only our Web activity (and in some cases purchasing and demographic data), algorithms can already perform stunning acts of inference. As our body area networks get more sophisticated, we’ll be adding things like biometric and body movement data to the mix—and the kinds of algorithmic inferences that will be possible might seem downright telepathic. In the next decade, if there’s a discrepancy between who you think you are and who you are in the eyes of the algorithm, you might second-guess yourself, and not the data. Say an algorithm profiles you as older than you actually are; you may think, “I must look old for my age,” and internalize that image of yourself.

And the possibilities for what our body language might reveal are nearly endless. What if you always assumed you came off as insecure, but an algorithm analyzing your gait identified you as fearless? What if you believe yourself to be utterly calm, but your typing patterns indicate you’re a ball of nerves? More troublingly, what if your data revealed that your heart rate spikes and your muscles tense when you interact with people of a different race?

On an individual level, these discoveries could be earth-shattering or utterly inconsequential, devastating or delightful, depending on who you are and what you learn. But for society as a whole, the impact is almost certain to be dramatic.

It will likely cause us to reexamine how we, as a society, understand and address issues such as racism and sexism. It will alter how we understand creativity and build professional networks.

These algorithmic identities could have more subtle impacts as well. For instance, observing the way a marketing algorithm defines gender could reveal shifts in how people whose biological sex is male or female behave, on average. Because an algorithm’s understanding of what constitutes male or female behavior is more fluid than society’s, it may update its definitions before society does.

Robin James, associate professor of philosophy at the University of North Carolina at Charlotte, puts it this way: “Demanding conformity to one and only one feminine ideal is less profitable for Facebook than it is to tailor their ads to more accurately reflect my style of gender performance. They would much rather send me ads for the combat boots I probably will click through and buy than the diapers or pregnancy tests I won’t.”

Basically, a marketing algorithm only cares about these categories to the extent that they help drive clicks and sales, so it will update how it defines gender with every new piece of information it gathers. As the data capture widens to include things such as emotional response data, algorithms will be able to anticipate, track, and adjust to social or market changes before the rest of us are even aware of it.

FINDING YOURSELF IN YOUR DATA

While this convergence of body area network data and powerful algorithms will almost certainly alter important established categories of identity, it will also create completely new categories of identity, based on things such as interests, preferences, or even the different ways our bodies and minds work. People in the Quantified Self movement have pioneered finding themselves in their data. Among the earliest and most enthusiastic adopters of wearable sensor technology, many in the movement created spreadsheets of data to surface patterns and look for clues about their body’s, their mind’s, maybe even their soul’s personal “operating system.”

For example, many quantified selfers compile data on when they slept and for how long, then cross-reference it with data about their mood or their productivity. They’ve formed communities and even self-organized studies with people engaged in similar inquiry.

A powerful driver of the movement is the desire to move beyond generalized recommendations and uncover more nuanced insights that help you, and people like you, be “better” at whatever you’re trying to accomplish, be it productivity, compassion, aging well, or athleticism.

As body area network data collection and analysis become automated and awareness of their potential grows, we’re likely to see much larger swathes of the population begin to find meaningful identities in their data. Going forward, the kinds of identities people discover, and what they want from them, are likely to become much more diverse. And some people will find that the new affinities they share with others become more meaningful to them than traditional social categories such as religion or sexual orientation.

ALGORITHMS OPEN AND CLOSE DOORS

The promise of this future is perfectly personalized experiences. Instead of relying on crudely and narrowly defined markers of identity, the state, the market, and other people will have new categories that lend more, or at least different, insight into who we are. And these will be used to give us greater personalization in everything from entertainment to education, from relationships to medicine, from security to safety.

But these same algorithmic identities could also direct our lives in important ways. “The algorithms don’t tell you what to do or not to do,” James writes, “but open specific kinds of futures for you.” Take an algorithmic identity we’re all familiar with: the credit score. Our credit scores can provide or deny us access to a home, a car, even certain jobs. James speculates that in the future we’re moving toward, we’ll have a credit score for everything.

On some level, this is a good thing: people will be given opportunities based on a more accurate understanding of who they are. An algorithm, for instance, would be less likely than a human to deny someone a loan or job opportunity because of their physical appearance. Indeed, we already have companies like JumpGap that attempt to take human bias out of the hiring process by using software to recommend candidates. A person whose biological identity is female but whose gender expression is male, for instance, might simply be male to an algorithm, which would therefore offer him information, experiences, and opportunities designed for a male audience that might be denied to him in person.

In James’ words, “gender [could] become even less and less connected to [biological] sex, but more and more explicitly about privilege and [how we interact with] institutions.” That’s to say, this kind of identity-based targeting will benefit specific individuals by reducing bias based on visual appearance, but it doesn’t touch, or may even reinforce, the larger social status quo that creates these disparities in the first place.

For instance, studies have shown that women are presented with fewer online ads for high-paying jobs than men are, and that black people are shown more ads for legal services. This probably isn’t because someone explicitly programmed in such biases; instead, the bias arises because larger social inequities make it more likely that black people would need legal services, or that women would be less likely to apply for certain high-paying jobs. And with substantial research showing that people often internalize the images of themselves they’re presented with, the consequences could be seriously empowering or disempowering, depending on who you are and what images of yourself you’re being shown.

In addition, we’re likely to see new categories of advantage or disadvantage emerge that transcend categories such as race and gender. As this future unfolds, these issues could become major rallying points for activism.

In the next decade, algorithms reading our body area networks will give us even more information to integrate into our sense of self and make our identities more dynamic than ever. They will be able to tell us about how we, as individuals and as a society, are changing. And they can be used to reinforce the status quo, or to push for change that’s truly transformative and radical. However they are used, bodies of data together with algorithms will shape who we think we are—and who we will become.

FUTURE NOW—The Complete New Body Language Research Collection

The New Body Language research is collected in its entirety in our inaugural issue of Future Now, IFTF’s new print magazine.

Most pieces in this issue focus on the human side of Human+Machine Symbiosis—how body area networks will augment the intentions and expressions that play out in our everyday lives. Some pieces illuminate the subtle, even invisible technologies that broker our outrageous level of connection—the machines that feed off our passively generated data and varying motivations. Together, they create a portrait of how and why we’ll express ourselves with this new body language in the next decade.

For More Information

For more information on the Tech Futures Lab and our research, contact:

Sean Ness | sness@iftf.org | 650.233.9517